Stanford University’s Human-Centered Artificial Intelligence

New Stanford tracker analyzes the 150 requirements of the White House Executive Order on AI and offers new insights into government priorities.

Published Nov 16, 2023

By Caroline Meinhardt, Christie M. Lawrence, Lindsey A. Gailmard, Daniel Zhang, Rishi Bommasani, Rohini Kosoglu, Peter Henderson, Russell Wald, Daniel E. Ho

On October 30, President Biden signed Executive Order 14110 on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (“the EO”). As we explained in our initial analysis, the EO is worth celebrating as a significant step forward in ensuring that the United States remains at the forefront of responsible AI innovation and governance. But there is still much to do, and the federal government’s success in implementing the EO’s extensive AI policy agenda will show whether we celebrated too soon.

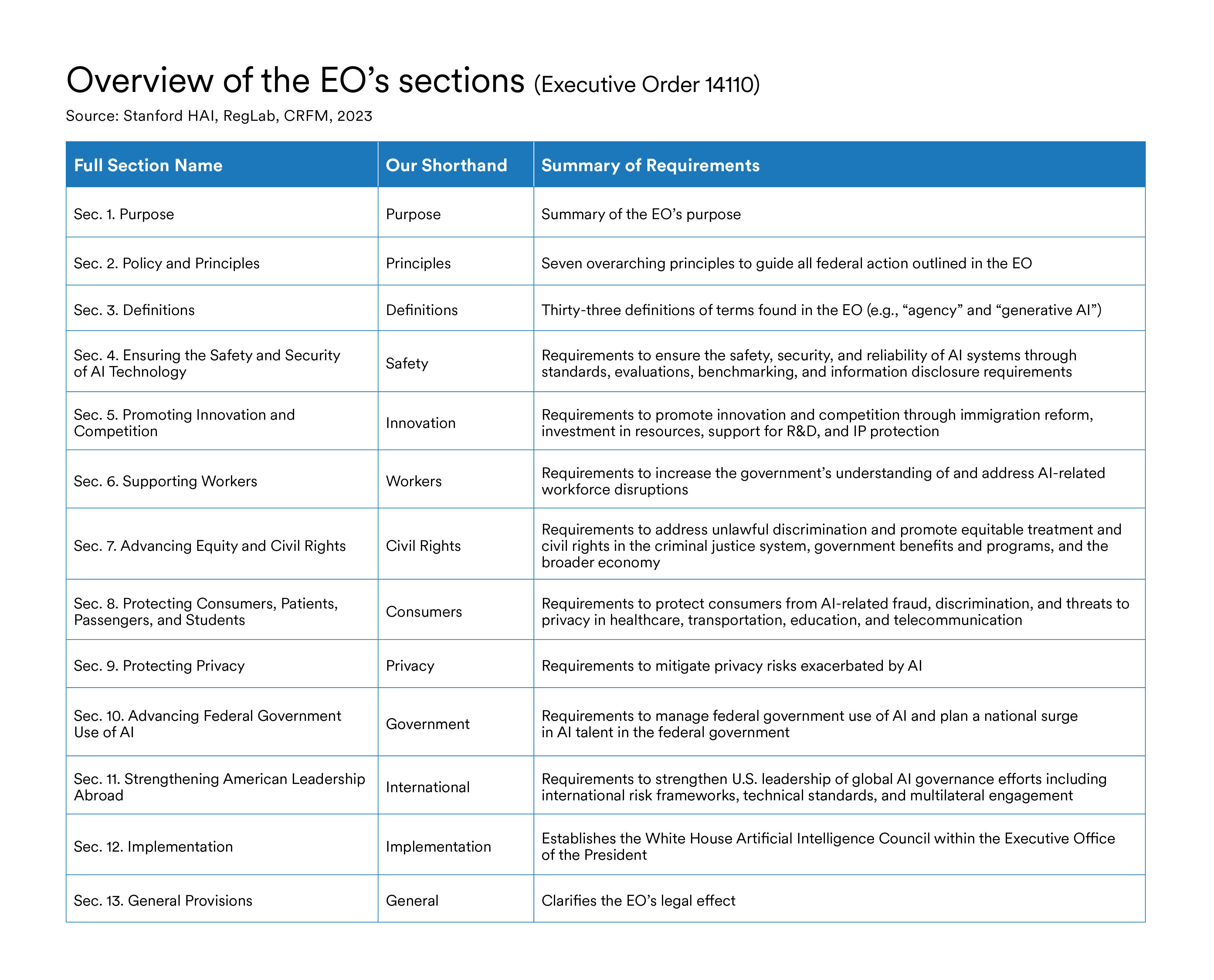

To follow and assess the government’s actions on AI, we now publish the Safe, Secure, and Trustworthy AI EO Tracker (“AI EO Tracker”)—a detailed, line-level tracker of the 150 requirements that agencies and other federal entities must now implement, in some cases, by the end of this year. It specifies key information for each requirement, including which EO section the requirement is located in; the policy issue area the requirement addresses; the government stakeholders responsible for and supporting its implementation; related regulations, laws, and initiatives; the “type” of requirement (i.e., whether it is time-boxed, open-ended, or ongoing); the deadline; and the presence of public consultation or reporting requirements.
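For readers who want to work with data in this shape, the fields listed above map naturally onto a simple record type. The Python sketch below is our own hypothetical illustration, not the tracker’s actual schema or file format. The names `Requirement`, `RequirementType`, and `time_boxed_share` are invented for this example. It also shows how a summary statistic such as the share of time-boxed requirements could be computed from rows like these.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum
from typing import Optional


class RequirementType(Enum):
    """The three requirement types distinguished in the tracker."""
    TIME_BOXED = "time-boxed"   # must be completed by a specified date
    OPEN_ENDED = "open-ended"   # mandated action with no target completion date
    ONGOING = "ongoing"         # regular or continuous action, no single deliverable


@dataclass
class Requirement:
    """One hypothetical tracker row; field names are illustrative only."""
    eo_section: int                      # e.g. 4 for Section 4 (Safety)
    policy_issue_area: str
    lead_stakeholders: list[str]
    req_type: RequirementType
    supporting_stakeholders: list[str] = field(default_factory=list)
    related_authorities: list[str] = field(default_factory=list)  # regulations, laws, initiatives
    deadline: Optional[date] = None      # only time-boxed requirements carry a deadline
    public_consultation: bool = False
    reporting_requirement: bool = False


def time_boxed_share(rows: list[Requirement]) -> float:
    """Fraction of rows whose type is time-boxed (0.0 for an empty list)."""
    if not rows:
        return 0.0
    return sum(r.req_type is RequirementType.TIME_BOXED for r in rows) / len(rows)
```

Under this sketch, a list with 108 time-boxed rows and 42 rows of other types would yield a share of 0.72, matching the 72 percent of the EO’s 150 requirements reported below as time-boxed.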


The AI EO Tracker builds upon prior research by the Stanford Institute for Human-Centered AI (HAI) and the RegLab analyzing the federal government’s implementation of AI-related legal requirements. Our initial December 2022 tracker and methodology (and the accompanying paper) for assessing the federal government’s implementation of three earlier AI-related legal authorities, spanning executive orders and legislation, shed light on the accomplishments and challenges the federal government faces in realizing AI policies. With the increased transparency the tracker provided, and the heightened pressure that transparency created, we began to observe more agencies fulfilling requirements. Assessing implementation will be just as important for this EO, the most comprehensive AI-related executive order to date, to help ensure that the federal government fulfills its requirements.

Going forward, the AI EO Tracker will be updated periodically. We hope this living document will allow policymakers, scholars, and others to track whether the responsible government entities are implementing these tasks and meeting their deadlines. Even in its current state, the tracker already reveals notable insights into the breadth, urgency, and priorities of the EO, which we highlight below.

Expansive Scope But Clear Policy Areas of Priority

The EO is remarkable in how comprehensively it covers a wide range of AI-related issue areas. By our count, it places on federal entities a total of 150 requirements—from developing guidelines or frameworks to conducting studies, creating task forces, issuing recommendations, implementing policies, and, where appropriate, engaging in rulemaking.

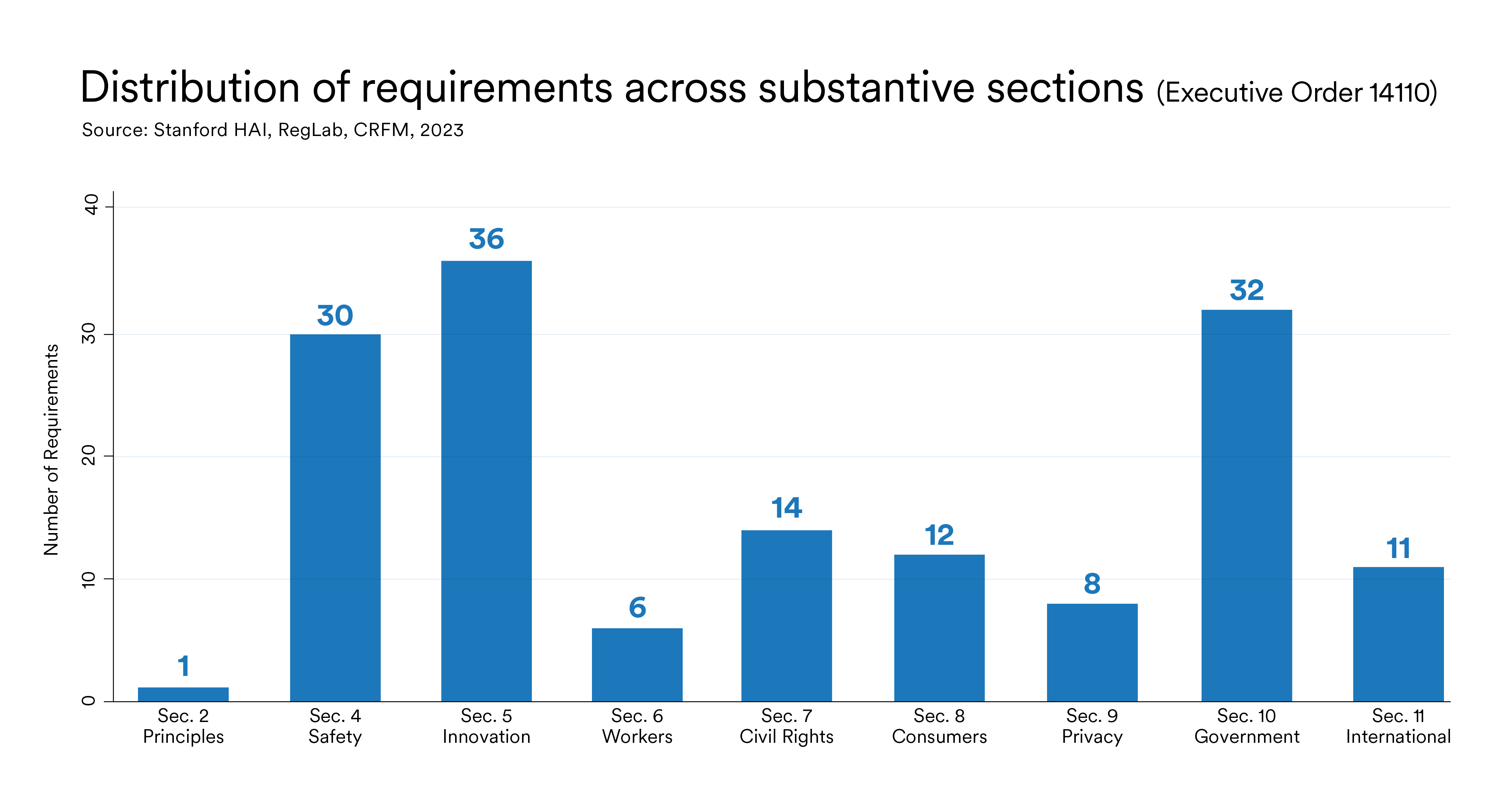

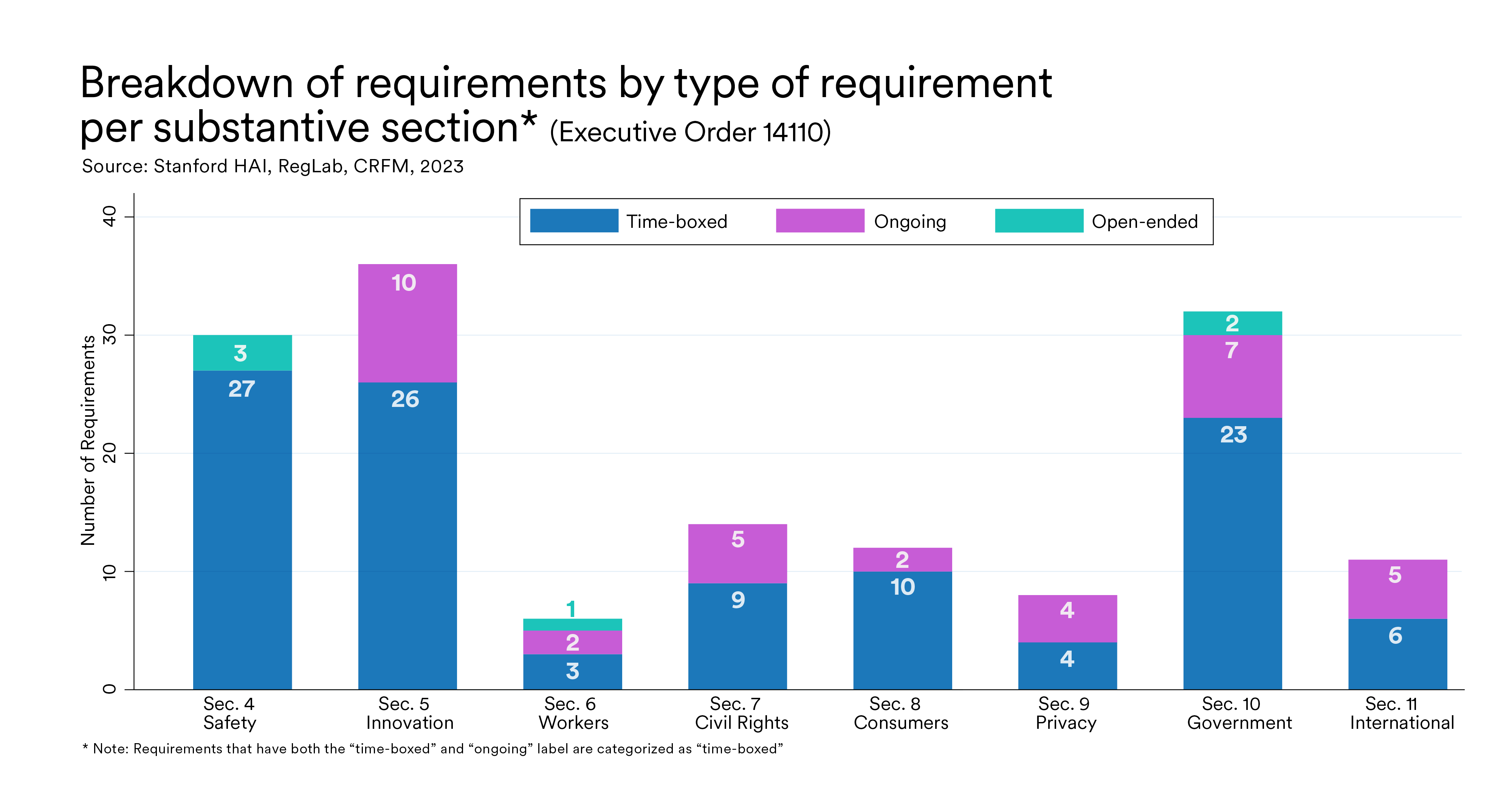

Despite its broad scope, the EO devotes noticeably more distinct requirements to some AI-related risks and policy areas than to others. Together, three of the nine sections that contain substantive requirements account for around two thirds of all requirements: Section 4 (Safety), Section 5 (Innovation), and Section 10 (Government). Raw counts, of course, do not paint the full picture, since requirements vary greatly in scale and complexity.

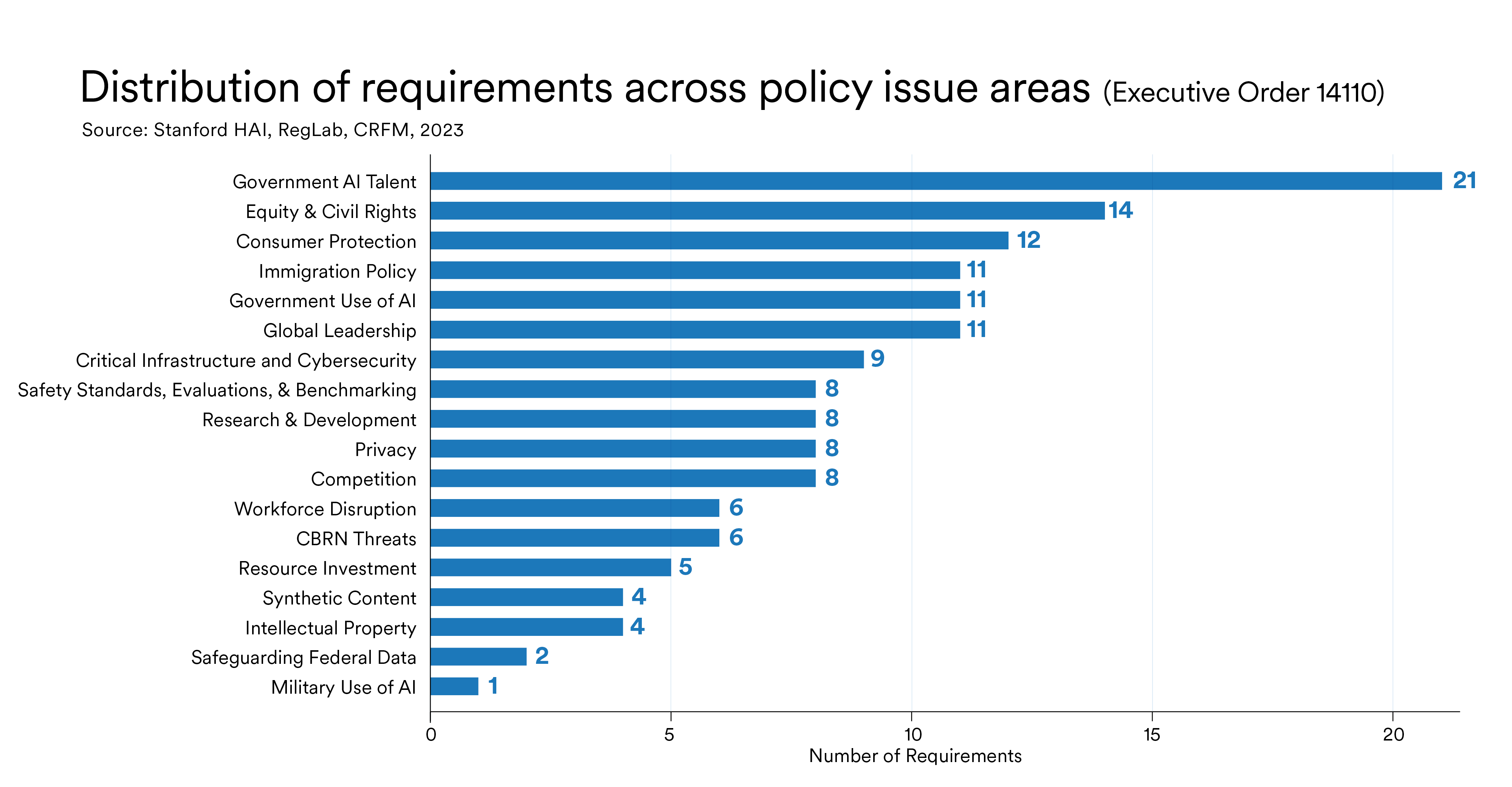

Because each section covers diverse topics, we further analyzed the requirements using our own, more granular categorization of policy issue areas. Based on our policy issue labeling (see the third column of the tracker), attracting AI talent to the federal government has by far the greatest number of discrete requirements. Section 10 (Government) lists 21 requirements (14 percent of all requirements) that aim to improve the hiring and retention of AI and AI-enabling talent in the federal government. This reflects an acute recognition that the federal government still lacks the broad and deep technical AI expertise required to advance its AI efforts, including the very tasks outlined in this EO. The sheer number of requirements may also reflect the difficulty of realizing a “Federal Government-wide AI talent surge”: overhauling and streamlining a vast morass of confusing hiring authorities and procedures requires many separate actions. Federal agencies and departments must implement these requirements swiftly and thoughtfully, or they may struggle to meet the EO’s longer-term requirements, many of which demand deep AI expertise.

Addressing AI safety, security, and reliability concerns is another clear focus. Grouped together, the Section 4 (Safety) requirements related to safety standards, evaluations, and benchmarking; critical infrastructure and cybersecurity; and chemical, biological, radiological, and nuclear (CBRN) risks make up 23 requirements (15 percent of all requirements) in the EO. They reflect growing concern in recent AI policy debates about longer-term national security risks, particularly bioweapons risk. Other policy issues that receive significant attention in the EO include addressing equity and civil rights, protecting consumer rights, reforming immigration policy (to attract AI talent to the United States), managing the federal government’s use of AI, and strengthening U.S. global leadership in AI governance.

A Whole-of-Government Effort

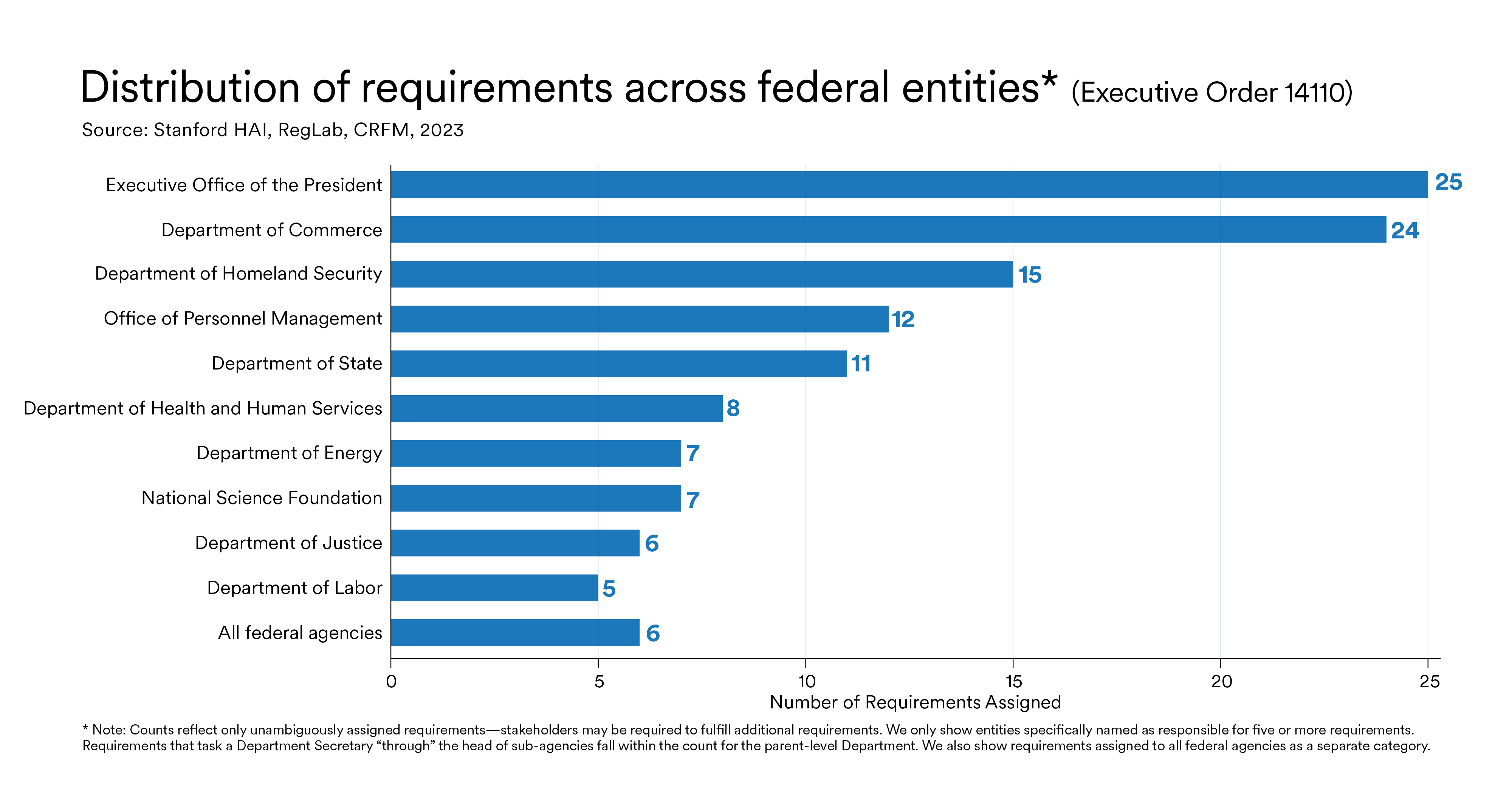

The EO assigns the main responsibility for tasks to more than 50 unique federal agencies or federal entities, with many more agencies and entities providing supporting functions.

With 25 and 24 requirements respectively, the federal entities within the Executive Office of the President (EOP) and the Department of Commerce are responsible for by far the most tasks. The EOP is, of course, not a monolith: its various agencies (e.g., the Council of Economic Advisers), senior officials (e.g., the Assistant to the President for National Security Affairs), permanent councils (e.g., the Chief Data Officers Council), and federal advisory committees (e.g., the President’s Council of Advisors on Science and Technology) have distinct mandates. Critically, the Director of the Office of Management and Budget (OMB), an agency within the EOP, is responsible for leading the implementation of 12 requirements, or 48 percent of the tasks that fall to the EOP. Other agencies with long to-do lists ahead of them include the Department of Homeland Security, the Office of Personnel Management, and the Department of State. This division of responsibility aligns with the top focus areas of the EO identified above.

That said, responsibilities are spread widely across a whole range of agencies and other federal actors. Thirteen requirements are to be led by all federal agencies or by a broader, unspecified group of federal agencies (e.g., “all agencies that fund life-sciences research”). Implementing the EO’s vision for AI governance will therefore require extensive collaboration and coordination across nearly every corner of the government. The long lists of supporting stakeholders explicitly called out as having coordinating, consulting, or implementing functions for many of the EO’s requirements also reflect this whole-of-government approach.

The Clock is Ticking

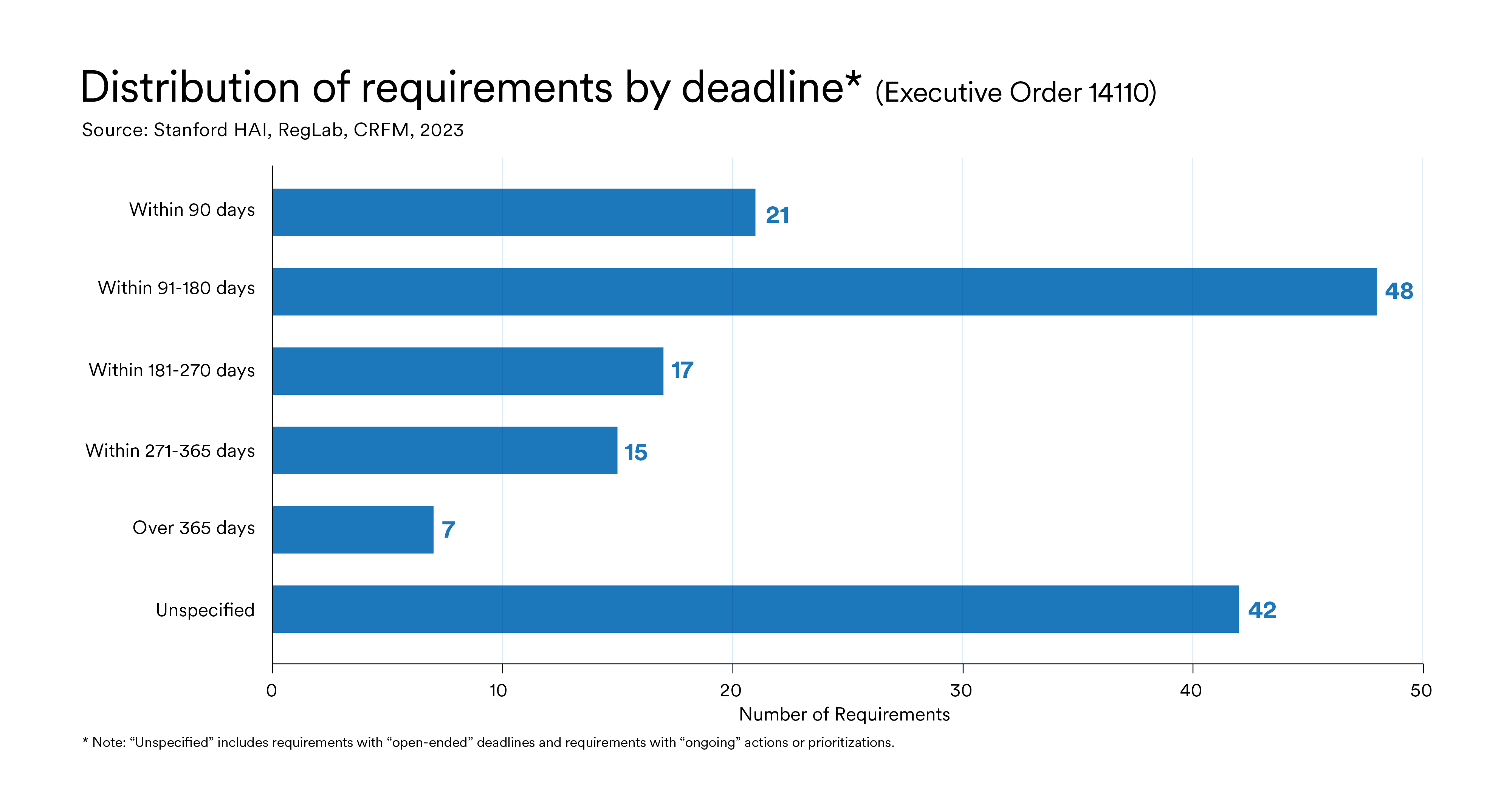

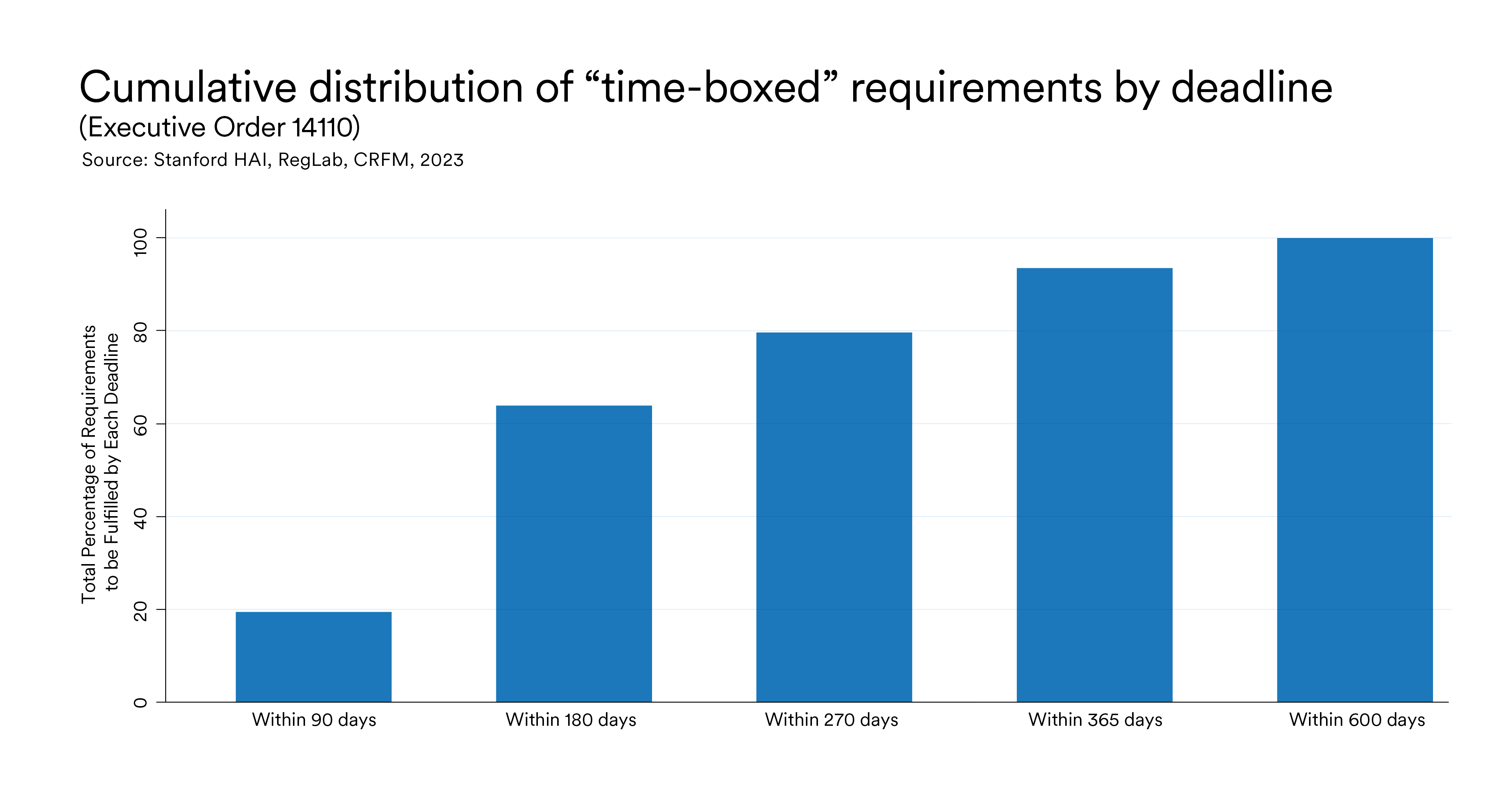

One of the most eye-catching features of the EO is the urgency with which it demands action on the federal government’s AI policy agenda. For a large majority of requirements (72 percent), it sets ambitious “time-boxed” deadlines, meaning the actions must be completed by a specified date. Of these deadlines, 94 percent fall within a year (i.e., by October 29, 2024), and nearly one fifth fall within 90 days (i.e., by January 28, 2024). Ten of those requirements must be implemented even sooner, before the end of 2023.
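The deadline dates above follow from simple calendar arithmetic, assuming each deadline is counted in calendar days from the October 30, 2023 signing date. The minimal Python sketch below (the helper name `deadline_after` is hypothetical) shows why a one-year deadline lands on October 29 rather than October 30: 2024 is a leap year, so 365 days after signing falls one day short of the anniversary.

```python
from datetime import date, timedelta

# Date the EO was signed.
SIGNED = date(2023, 10, 30)


def deadline_after(days: int, start: date = SIGNED) -> date:
    """Calendar date falling `days` days after `start`."""
    return start + timedelta(days=days)


# A 90-day deadline lands on January 28, 2024.
print(deadline_after(90))    # 2024-01-28
# 2024 includes February 29, so 365 days after signing is October 29, 2024.
print(deadline_after(365))   # 2024-10-29
```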

These quick turnaround times may reflect the fact that many of the tasks assigned to federal entities are already underway. At the same time, they may reflect a push to make as much progress as possible before the end of a first term, as a presidential transition could lead to revocation of EOs.

As much as these strict deadlines speak volumes, so too does their absence: 42 requirements (28 percent) do not have specified deadlines. The proportion of time-boxed requirements within each section may reflect the different nature of the issue areas addressed.

For example, Section 4 (Safety) not only contains the largest number of requirements but also has the highest percentage (90 percent) of time-boxed requirements. This may illustrate the urgency with which the White House wants to gather the information needed to assess and, as appropriate, address AI-related safety and security risks.

By contrast, 50 percent of requirements in Section 6 (Workers) and Section 9 (Privacy) are time-boxed, as are 64 percent of requirements in Section 7 (Civil Rights). The remaining requirements are mostly “ongoing” (i.e., demanding regular, often annual, or continuous action rather than a specific deliverable or concrete outcome) and occasionally “open-ended” (i.e., mandated actions without a specific target date for completion); both kinds are inherently harder to track. The higher proportion of “ongoing” requirements in Sections 9 (Privacy), 11 (International), 7 (Civil Rights), 5 (Innovation), and 10 (Government) evinces the need for continuing, rather than discrete, engagement to influence these policy areas.

While the relative lack of time-boxed requirements may not necessarily affect implementation, our past research has shown that, on average, time-boxed requirements in prior AI-related EOs are implemented (or at least can be publicly verified as implemented) at a considerably higher rate than requirements without deadlines. Tasks without specific deadlines, although written to give a federal entity flexibility, can also be vague, and their implementation may be difficult to track. Such “ongoing” or “open-ended” tasks may, for example, direct agencies to “consider” adopting new guidance documents, “prioritize” financial and other support for policy goals, or “streamline” internal processes.

Conclusion

The EO is ambitious in its scope, but the distribution of requirements also shows that the Biden Administration has made pragmatic decisions about which policy issue areas require strict deadlines to achieve urgent outcomes and which areas are so complex that they require ongoing engagement and iteration. We hope this AI EO Tracker and statistics related to the distribution of wide-ranging requirements will provide a useful tool to promote successful implementation of these critical priorities for the nation’s approach to AI.

Caroline Meinhardt is the policy research manager at Stanford HAI. Christie M. Lawrence is a concurrent JD/MPP student at Stanford Law School and the Harvard Kennedy School. Lindsey A. Gailmard is a postdoctoral scholar at Stanford RegLab. Daniel Zhang is the senior manager for policy initiatives at Stanford HAI. Rishi Bommasani is the society lead at Stanford CRFM and a PhD student in computer science at Stanford University. Rohini Kosoglu is a policy fellow at Stanford HAI. Peter Henderson is an incoming assistant professor at Princeton University with appointments in the Department of Computer Science and School of Public and International Affairs. He received a JD from Stanford Law School and will receive a PhD in computer science from Stanford University. Russell Wald is the deputy director at Stanford HAI. Daniel E. Ho is the William Benjamin Scott and Luna M. Scott Professor of Law, Professor of Political Science, Professor of Computer Science (by courtesy), Senior Fellow at HAI, Senior Fellow at SIEPR, and Director of the RegLab at Stanford University.

Stanford HAI’s mission is to advance AI research, education, policy and practice to improve the human condition.